Peer connection with 2 video sources

🎻 June 2022 fiddle

Resources

- The fiddle: https://jsfiddle.net/fippo/a1ysuh85/31/

- Sender priority: https://w3c.github.io/webrtc-priority/

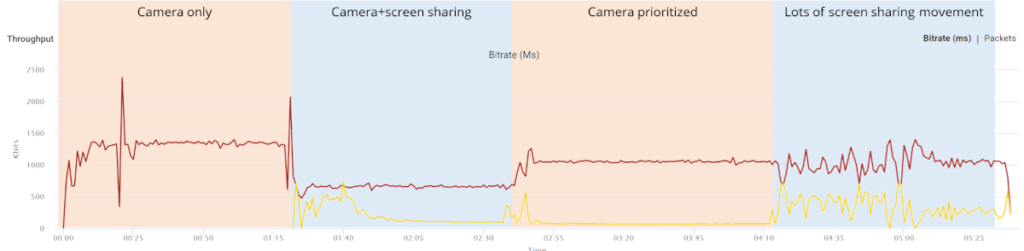

That demo Philipp did during this session? We took the webrtc-internals dump file from it, uploaded it to testRTC and this is what we’ve seen:

Transcription

Tsahi: Hi, and welcome to the WebRTC Fiddle of the Month. With me is Philipp Hancke, and this time we’re going to talk about a single peer connection with two video sources, and what happens there. Philipp, do you want to explain a bit what we’re going to look at?

Philipp: Yes, we’ve seen a lot of questions recently about whether you should use one peer connection or two when you share the camera and the screen at the same time. So I did a fiddle, and it turned out to be very interesting, because we can see a lot of interesting behavior.

Tsahi: Okay, before that, if you remember, five or six years ago, or even more, I wrote something about the need for a single peer connection within your application. The reason for that is that you want to let WebRTC know what is going on, and you want to spend fewer resources on overhead and more on the actual content. What we’re saying now is slightly counterintuitive, I think, because we’re saying you might want to reconsider that when you are sending two separate video sources within the same call.

Philipp: Yes, I mean, in general, you want to avoid 2 peer connections, because that means race conditions going on all the time. And more importantly, you have the two bandwidth estimators fighting each other. That’s the worst case scenario.

Tsahi: Let’s use one peer connection. It’s the same call anyway, so WebRTC can understand everything because we tell it everything in that single peer connection. And let’s put both the camera and the screen sharing content there, right?

Philipp: Yes.

Tsahi: Okay. So let’s see what happens.

Philipp: I’m going to start the camera already. It’s not visible on the screen, but I want to let it run for a few seconds while I explain. So we have a pretty standard HTML snippet. It has the graph library from the WebRTC samples, and it has two graphs: the first is for the overall bitrate and the bandwidth estimation, and the second is for the per-sender outgoing bitrate. And then we have the fiddle itself. I’m going to minimize the HTML part because it’s boring. It creates two peer connections on the click of the start button, it gets the camera at 720p resolution, and then it negotiates. And it adds a bandwidth restriction of 1.5 megabits per second by adding a b=AS line to the remote SDP. So that is easy. And we can see here, it ramps up nicely to slightly less than 1.5 megabits per second.
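The b=AS restriction Philipp mentions can be sketched as a small SDP string helper. This is a minimal sketch and not the code from the fiddle: the name `setVideoBandwidth` is hypothetical, and it assumes the video m-section carries its own `c=` line, which is what browsers normally generate.

```javascript
// Hypothetical helper (not from the fiddle): insert a b=AS line, in kilobits
// per second, into the video m-section of an SDP blob, right after that
// section's c= line.
function setVideoBandwidth(sdp, kbps) {
  const out = [];
  let inVideo = false;
  for (const line of sdp.split('\r\n')) {
    // Track which media section we are in.
    if (line.startsWith('m=')) {
      inVideo = line.startsWith('m=video');
    }
    // Drop an existing b=AS line in the video section to avoid duplicates.
    if (inVideo && line.startsWith('b=AS:')) continue;
    out.push(line);
    // In SDP, b= lines follow the c= line of the section.
    if (inVideo && line.startsWith('c=')) {
      out.push(`b=AS:${kbps}`);
    }
  }
  return out.join('\r\n');
}

// Browser usage (assumed, not run here):
// await pc.setRemoteDescription({
//   type: answer.type,
//   sdp: setVideoBandwidth(answer.sdp, 1500),
// });
```

Applying the helper to the remote description, as in the commented usage, matches what is described in the session: the limit is set by munging the remote SDP rather than through an API call.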

Tsahi: And what we have is 2 peer connections connecting to each other. What you did up until now is connect the camera over that. And in the peer connection, the only thing that we’ve done is limited the bitrate to 1.5 megabits per second.

Philipp: Yes.

Tsahi: Okay.

Philipp: In practice, you will often see some limitation by the network. So now we’re going to add the screen to that. We do that by calling getDisplayMedia with video, adding the track, and still applying the restriction of 1.5 megabits per second. And when we do that, I’m going to share the same tab here, and that is relatively static content. So the encoder is going to produce a keyframe and then very low bitrate delta frames. And what we see here is that the camera dropped from 1.5 megabits to 750 kilobits per second. So the 1.5 megabits of bandwidth that is available is divided evenly between the two tracks or sources.
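The screen-sharing step can be sketched roughly as follows. This is an illustration under assumptions, not the fiddle's code: `addScreenShare` is a hypothetical name, and the peer connection and `mediaDevices` object are passed in as parameters (in a browser you would pass `navigator.mediaDevices`). Adding a track also requires renegotiation, which is left out here.

```javascript
// Sketch: capture the screen (or a tab) and add its video track to an
// existing peer connection. Renegotiation after addTrack is not shown.
async function addScreenShare(pc, mediaDevices) {
  // Prompts the user to pick a screen, window, or tab.
  const stream = await mediaDevices.getDisplayMedia({ video: true });
  const [track] = stream.getVideoTracks();
  // addTrack returns the RTCRtpSender that will carry the screen share.
  return pc.addTrack(track, stream);
}

// Browser usage (assumed, not run here):
// const screenSender = await addScreenShare(pc, navigator.mediaDevices);
```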

Tsahi: Okay, so the WebRTC peer connection said: we have 1.5 megabits per second, and I’ve got the camera and the screen sharing, 2 video sources that I need to send. The easiest thing for me to do is take 1.5 divided by two, that’s 750 each, so I’m going to give 750 kilobits per second to the webcam and 750 to the screen sharing.

Philipp: Yes. What actually happens is different because, as you can see here on the blue line, the content is static. So it doesn’t even use the 750 kilobits per second that it has available.
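A per-sender bitrate like the one plotted in the graph can be derived from two consecutive stats samples. The sketch below is an assumption about how such a graph could be fed, not the fiddle's actual code. `bitrateKbps` is a hypothetical name, while `bytesSent` and `timestamp` (in milliseconds) are standard fields of the `outbound-rtp` stats entries.

```javascript
// Compute the outgoing bitrate in kilobits per second from two samples of
// the same outbound-rtp stats entry.
function bitrateKbps(previous, current) {
  const elapsedMs = current.timestamp - previous.timestamp;
  if (elapsedMs <= 0) return 0;
  const bits = (current.bytesSent - previous.bytesSent) * 8;
  // Bits per millisecond is the same number as kilobits per second.
  return bits / elapsedMs;
}

// 93,750 bytes sent in one second is 750 kilobits per second.
bitrateKbps({ timestamp: 1000, bytesSent: 0 }, { timestamp: 2000, bytesSent: 93750 }); // 750

// Browser usage (assumed, not run here): sample sender.getStats() once per
// second, pick the report with type 'outbound-rtp', and pass the previous
// and current entries to bitrateKbps.
```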

Tsahi: Well that is because it has nothing to encode. It’s the same boring static page unless you move your head or your fingers.

Philipp: Yes, but that means we have needlessly reduced the camera bitrate by 750 kilobits per second.

Tsahi: Okay, so although the peer connection knows it all and has everything, it looked at the camera and said, well, that’s 750, and it looked at the screen share and said that’s 750. But it wouldn’t say, well, the screen share didn’t use everything, so I can increase the camera.

Philipp: Yes.

Tsahi: Okay.

Philipp: Now there is a way to control this split a bit. There is a thing called priority for the senders, and that got moved out of the main WebRTC specification, so I wasted an hour trying to find it, even though it’s implemented in Chrome. And that’s what happens when we check the prioritize camera button here. In code, what happens is we call the sender’s getParameters and set the priority to either high or low, which is the default. And now we can see the camera ramped up to one megabit per second.
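The priority change described here can be sketched like this. It is a minimal illustration rather than the fiddle's code: `setSenderPriority` is a hypothetical name, and the `priority` field on each encoding comes from the WebRTC priority extension linked in the resources, with the values `'very-low'`, `'low'`, `'medium'` and `'high'`.

```javascript
// Sketch: set the priority on every encoding of a sender and apply it.
async function setSenderPriority(sender, priority) {
  // Parameters have to be read first and then written back.
  const parameters = sender.getParameters();
  for (const encoding of parameters.encodings) {
    encoding.priority = priority;
  }
  await sender.setParameters(parameters);
  return parameters;
}

// Browser usage (assumed, not run here): `cameraSender` is whatever
// pc.addTrack returned for the camera track.
// await setSenderPriority(cameraSender, 'high'); // prioritize the camera
// await setSenderPriority(cameraSender, 'low');  // back to the default
```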

Tsahi: And that’s because we prioritized it.

Philipp: Yes.

Tsahi: So in this case, it got two thirds of the bitrate in terms of the budget that it has.
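The numbers seen in the session can be reproduced with a simple weighted split. This is only arithmetic to illustrate the observed results: the function name and the weights are assumptions chosen to match the demo (750/750 before prioritizing, roughly two thirds for the camera afterwards), not the allocation logic or the weights that the WebRTC library actually uses.

```javascript
// Split a total bitrate budget between sources in proportion to weights.
function splitBudget(totalKbps, weights) {
  const sum = Object.values(weights).reduce((a, b) => a + b, 0);
  const shares = {};
  for (const [name, weight] of Object.entries(weights)) {
    shares[name] = (totalKbps * weight) / sum;
  }
  return shares;
}

// Equal weights give the even split seen before prioritizing.
splitBudget(1500, { camera: 1, screen: 1 }); // { camera: 750, screen: 750 }

// An assumed 2:1 weighting gives the camera two thirds of the budget.
splitBudget(1500, { camera: 2, screen: 1 }); // { camera: 1000, screen: 500 }
```

In both cases the share is fixed by the weights alone, which is the limitation discussed next: whatever the screen share leaves unused is not handed back to the camera.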

Philipp: Yes.

Tsahi: But it still lacks the thing.

Philipp: Yes. Now, the problem with the whole screen sharing or tab sharing is that the bitrate is low at the moment. But what if I start scrolling up and down a lot? We will see, hopefully, that the bitrate goes up. And it does: it is ramping up quite a bit, and the camera dropped in response.

Tsahi: It does, but it still doesn’t go and give it more room, although in the video that gets recorded the webcam is at a lower resolution and the actual screen share is full screen?

Philipp: Yes.

Tsahi: Okay.

Philipp: And if you look at this, the camera bitrate is fluctuating a lot. So it is not an ideal situation. And this gets more tricky if you have actually moving content.

Tsahi: So in a single peer connection, it will be almost impossible for us, or for WebRTC, to take the data that it knows and optimize for the actual type of content, or for what you as a developer want to convey in terms of the priorities that you have for the channels?

Philipp: Yes, I mean, you can certainly spend more effort on it, but nobody has done that so far. And for example, Google Meet uses 2 peer connections.

Tsahi: Yep. That was the next thing that I was going to say. Because Google Meet uses 2 peer connections, you should as well, because that’s where the optimization and focus is in terms of the implementation of the WebRTC library today.

Philipp: Yes, I mean, what will happen then is that maybe the bandwidth estimators fight a bit, but that is a fight that is actually going to produce better results than the naïve approach of just splitting in two.

Tsahi: So for multiple outgoing video sources, we would like to use multiple peer connections, and we’ll likely end up with 2 peer connections there. And for all incoming media streams, we’re fine with, or we prefer, a single peer connection, because that allows us to optimize the bandwidth better for that type of scenario and that type of stream.
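The two-connection setup recommended here could look roughly like the sketch below. It is an illustration under assumptions, not how Google Meet or the fiddle implements it: `createSendingConnections` is a hypothetical name, the peer connection constructor is passed in as a parameter (in a browser, `RTCPeerConnection`), and signaling for each connection is omitted.

```javascript
// Sketch: one sending peer connection per outgoing video source, so each
// source gets its own bandwidth estimation. Signaling is not shown.
function createSendingConnections(PeerConnection, cameraStream, screenStream, config) {
  const cameraPc = new PeerConnection(config);
  const screenPc = new PeerConnection(config);
  for (const track of cameraStream.getTracks()) {
    cameraPc.addTrack(track, cameraStream);
  }
  for (const track of screenStream.getTracks()) {
    screenPc.addTrack(track, screenStream);
  }
  return { cameraPc, screenPc };
}

// Browser usage (assumed, not run here):
// const { cameraPc, screenPc } = createSendingConnections(
//   RTCPeerConnection, cameraStream, screenStream, { iceServers: [] });
```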

Philipp: Yes.

Tsahi: Okay. Thank you, Philipp and see you next month in our next fiddle of the month.

Philipp: Yes, bye.

Tsahi: Bye.